- #94fbr enroute 4 how to

- #94fbr enroute 4 manual

- #94fbr enroute 4 full

- #94fbr enroute 4 software

- #94fbr enroute 4 free

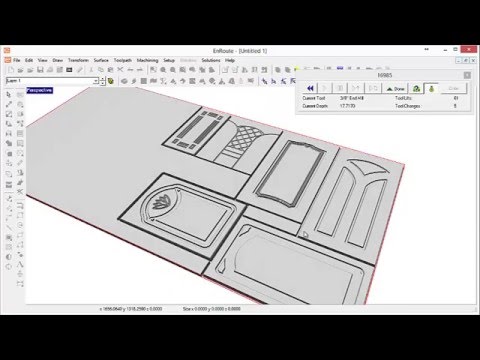

I was so happy I’d found it, almost from the very first part I made on my CNC. For most of us, the end result from CNC comes out looking much nicer, and with much less effort.


But CNC is fabulously more productive, especially if you don’t have the years of experience that make a good manual machinist the consummate craftsman that he is. I love a sweet Monarch 10EE as much as the next guy.


In CNC alone, CNC software can be completely transformative to your productivity in ways that are not even dreamed of with manual machining. A simple analogy makes it easier to understand why Digital Tooling is so important for CNC: CNC is to manual machining as a word processor is to a typewriter. As usual, it pays to have better tooling, but with CNC it is especially important to have good CNC software. With CNC, your digital tooling will have a bigger productivity impact than any of your “real” tooling.


Then I went CNC, and I started noticing a lot of that tooling wasn’t needed any more. Though I might like to have a 4th axis, I didn’t need a rotary table, for example. But suddenly, I had a whole new category of tooling to add. At the very least, I needed a CAD program to make drawings, which I would then feed to a CAM program to generate the G-code needed to be really productive with the machine. G-code editors and feeds and speeds calculators were not far behind.

Digital Tooling: we need CNC software as much as hard tooling to be successful CNC’ers.

There are many other kinds of CNC software, which I’ve taken to calling “Digital Tooling”, that are important to CNC work. Some machinists are funny about Digital Tooling. I’ve talked to machinists who spend hundreds on custom ground cutters and want to preserve their life as long as possible, but balk at $79 for a sophisticated feeds and speeds calculator that is the key to extending that tool life. It doesn’t take many cutters saved for very long to completely pay for that feeds and speeds calculator! Or, in another case, they are proud that they have a name-brand Haimer 3D Taster or Blake Coaxial Indicator and not a clone, but they want a cheap or free piece of CNC software.

NoIndex: the noindex directive is a meta tag value.

A sitemap helps search engine robots index your website faster and more thoroughly. You can create a sitemap.xml with various free and paid services, or you can write it yourself (read about how to write a sitemap).

1) A sitemap must be no larger than 50 MB (52,428,800 bytes) uncompressed and can contain at most 50,000 URLs. If you have more URLs than this, create multiple sitemap files and use a sitemap index file.
2) Put your sitemap in the website root directory and add the URL of your sitemap to robots.txt.
3) sitemap.xml can be compressed using gzip for faster loading.

Broken link: a broken link is an inaccessible link or URL of a website. A higher rate of broken links has a negative effect on search engine ranking due to reduced link equity. It also has a bad impact on user experience. There are several reasons for a broken link. All are listed below.

2) The destination website removed the web page you linked to (a common 404 error).
3) The destination website has permanently moved or no longer exists (changed domain, or site blocked or dysfunctional).
4) The user may be behind a firewall or similar software or security mechanism that is blocking access to the destination website.
5) You have provided a link to a site that is blocked by a firewall or similar software for outside access.
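The causes of broken links listed above can be told apart, roughly, by how a request for the link fails. The following is a minimal sketch using only Python's standard library; the function name `classify_link`, its labels, and the `opener` parameter (there so the function can be tried without network access) are illustrative assumptions, not part of any tool mentioned here.

```python
import urllib.error
import urllib.request


def classify_link(url, opener=urllib.request.urlopen, timeout=10):
    """Return a short label describing whether a link works or why it fails."""
    try:
        with opener(url, timeout=timeout) as response:
            return "ok" if response.status < 400 else f"http {response.status}"
    except urllib.error.HTTPError as err:
        # HTTPError must be caught before OSError, since it is a subclass of it.
        if err.code == 404:
            return "page removed (404)"
        if err.code in (401, 403):
            return "access blocked"
        return f"http {err.code}"
    except OSError:
        # Covers URLError, DNS failures, refused connections and timeouts:
        # the site has moved, no longer exists, or is unreachable from here.
        return "site unreachable"
```

A firewall on the user's side and a site that is really gone can look the same from a single machine, so a result of "site unreachable" is worth confirming from a second network before the link is removed.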

A sitemap is used to list the directories and pages of your website for crawling and indexing by search engines and for access by users.
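As an illustration of what such a file looks like, here is a hedged sketch that builds sitemap XML from a list of URLs and starts a new file whenever the 50,000-URL limit is reached. The function name `build_sitemaps` and the example URLs are assumptions made for this sketch; writing the files to disk and building the sitemap index are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file URL limit from the sitemap protocol


def build_sitemaps(urls, max_urls=MAX_URLS):
    """Return a list of sitemap XML strings, each holding at most max_urls URLs."""
    documents = []
    for start in range(0, len(urls), max_urls):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url in urls[start:start + max_urls]:
            # Each page becomes <url><loc>...</loc></url>.
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
        documents.append(ET.tostring(urlset, encoding="unicode"))
    return documents


if __name__ == "__main__":
    pages = ["https://example.com/", "https://example.com/about"]
    for document in build_sitemaps(pages):
        print(document)
```

When more than one document comes back, each would be saved as its own file and listed in a sitemap index file.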


robots.txt contains the names of search bots or other bots, the list of directories each bot is allowed or disallowed to crawl and index, the time delay bots should observe while crawling, and even the sitemap URL. Full access, full restriction, or customized access or restriction can be imposed through robots.txt. Your website directories will be crawled and indexed by search engines according to the robots.txt instructions. So add a robots.txt file to your website root directory. Write it properly, including your content-rich pages and other public pages and excluding any pages that contain sensitive information. Remember that a robots.txt instruction restricting access to sensitive information is not a strong safeguard from a web security standpoint.

A sitemap is an XML file that contains the full list of your website URLs.
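To make the robots.txt contents described above concrete, the sketch below parses a small example file with Python's standard `urllib.robotparser` module and asks it what a bot may fetch. The file contents, the `example.com` URLs, and the 10-second delay are made-up values for illustration only.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt: one rule group for all bots, plus a sitemap URL.
EXAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 10

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(EXAMPLE_ROBOTS_TXT.splitlines())

# Public pages are allowed, the disallowed directory is not.
print(parser.can_fetch("*", "https://example.com/blog/post"))     # True
print(parser.can_fetch("*", "https://example.com/private/data"))  # False

# The crawl delay and sitemap URL declared in the file.
print(parser.crawl_delay("*"))
print(parser.site_maps())
```

The parser only reports what the file asks for. Nothing stops a bot from ignoring it, which is why sensitive pages need real access control as well.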


Robots.txt is a text file that resides in the website root directory and contains instructions for various robots (mainly search engine robots) on how to crawl and index your website for their search results.
